I’m originally from New Hampshire but moved to Los Angeles with my wife Stephanie and three kids in 2015 when I started working at NASA’s Jet Propulsion Laboratory as a science apps and data interaction engineer.

I earned my undergraduate degree at the University of Massachusetts Lowell after I got out of the Marines, and a master’s degree in computer science at Rivier University. My current projects include the Data Analysis Tool on the NASA Sea Level Change Portal, the Orbit Viewer visualization for the Center for Near Earth Object Studies, and image processing for the Cassini mission, along with a few others.

My interest in making this kind of content started when I was completing my master’s degree and wrote software that created digital terrain models. It forced me to learn computer graphics as I built in a software renderer. I then moved to general space graphics using WebGL and later Blender.

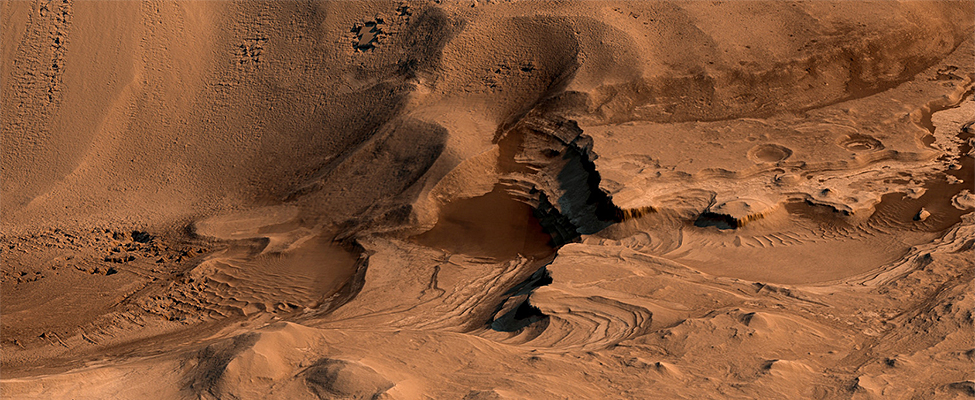

(Above: Light-toned layering in Labyrinthus Noctis Pit, rendered using Autodesk Maya and Adobe Lightroom. HiRISE data processed using gdal. PSP_003910_1685)

My first move into true science data was assembling raw HiRISE data of the Cydonia region of Mars, followed by Cassini, Galileo, and Voyager image processing. I’m not sure what got me back to working with the HiRISE DTM data, but I think I may have been trying to create views of Mars as MSL Curiosity might see them. Then that line of artwork sort of took on a life of its own.

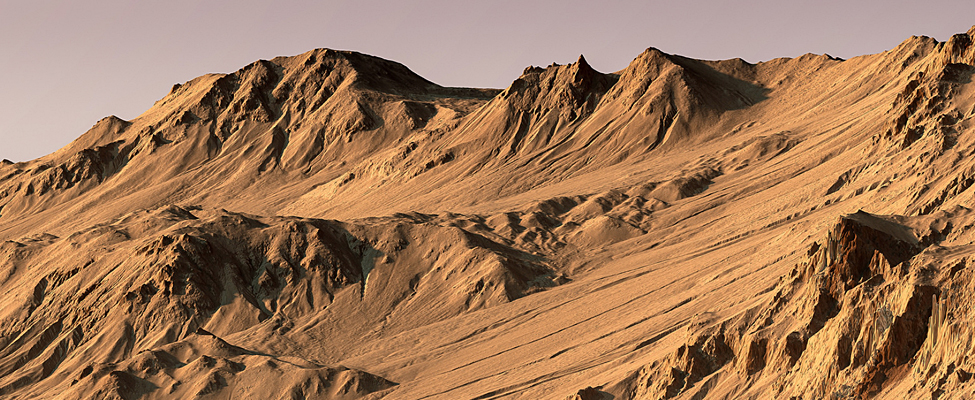

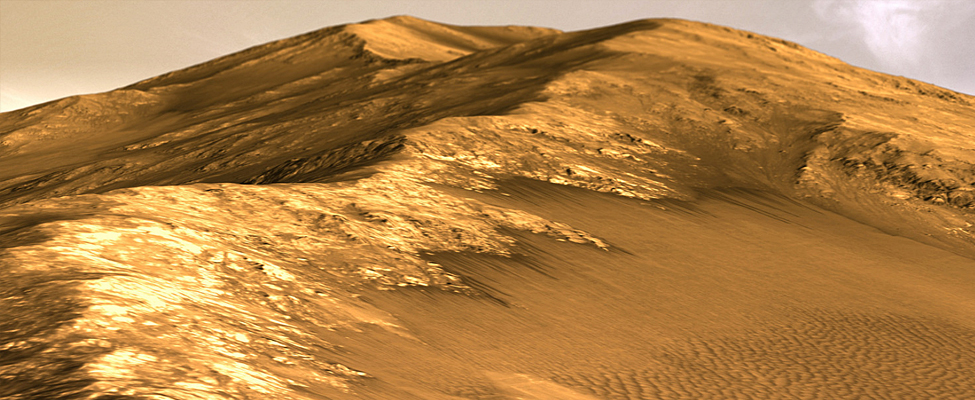

(Above: Light-toned gully materials on Hale Crater wall, rendered using Autodesk Maya and Adobe Lightroom. HiRISE data processed using gdal. PSP_002932_1445)

My workflow for these images is pretty specific to HiRISE modeling, though it works for other DTM-based scenes. I use the gdal toolset and Adobe Photoshop to convert the DTM and orthoimagery data into useful inputs for rendering.

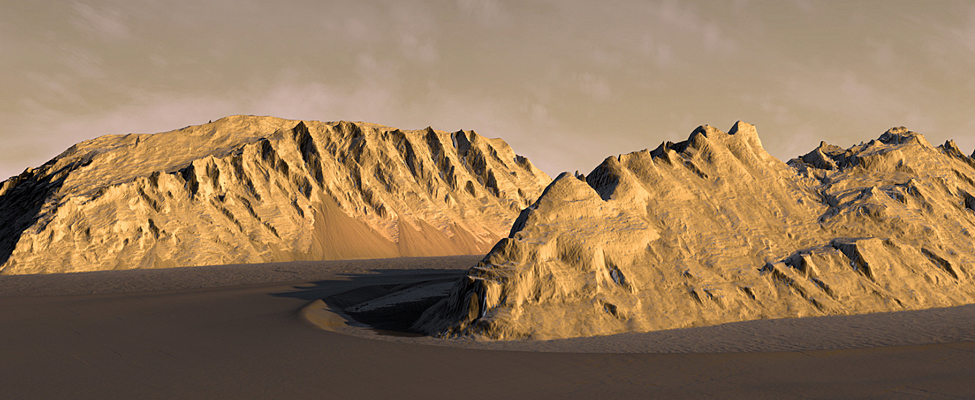

(Above: Light-toned mounds in Ganges Chasma, rendered using HiRISE DTM data in Autodesk Maya and Adobe Photoshop. ESP_017173_1715)

Preparing these inputs includes converting the DTM IMG files to a displacement map (which encodes terrain height) and converting the orthoimage from the JP2 format to a TIFF. If I’m modeling a single dataset, I can generally keep the data at their full resolution. If I combine datasets from additional observations, I’ll usually need to lower the resolution to stay within the memory and performance limitations of my computer.
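For readers who want to try the conversion step, here is a minimal Python sketch that builds `gdal_translate` command lines for it. This is not my exact set of commands: the file names, the elevation range, and the 16-bit output type are assumptions for illustration, and the helper functions are hypothetical. The elevation minimum and maximum would normally come from the DTM’s own statistics (for example, from `gdalinfo -stats`).

```python
import shlex


def dtm_to_displacement_cmd(dtm_path, out_path, elev_min, elev_max, scale_pct=100):
    """Build a gdal_translate command that maps elevations to a 16-bit TIFF.

    elev_min/elev_max (meters) are stretched to 0..65535 so the full
    altimetry range uses the whole displacement-map bit depth.
    """
    cmd = [
        "gdal_translate",
        "-of", "GTiff",
        "-ot", "UInt16",
        "-scale", str(elev_min), str(elev_max), "0", "65535",
    ]
    if scale_pct != 100:
        # Downsample when combining observations to stay within memory limits.
        cmd += ["-outsize", f"{scale_pct}%", f"{scale_pct}%", "-r", "bilinear"]
    cmd += [dtm_path, out_path]
    return cmd


def ortho_to_tiff_cmd(jp2_path, out_path, scale_pct=100):
    """Build a gdal_translate command that converts a JP2 orthoimage to TIFF."""
    cmd = ["gdal_translate", "-of", "GTiff"]
    if scale_pct != 100:
        cmd += ["-outsize", f"{scale_pct}%", f"{scale_pct}%", "-r", "bilinear"]
    cmd += [jp2_path, out_path]
    return cmd


if __name__ == "__main__":
    # Hypothetical file names and elevation range, for illustration only.
    print(shlex.join(dtm_to_displacement_cmd("dtm.IMG", "disp.tif", -100.0, 250.5)))
    print(shlex.join(ortho_to_tiff_cmd("ortho.JP2", "ortho.tif", scale_pct=50)))
```

The printed commands can be pasted into a shell or passed to `subprocess.run`, provided gdal is installed with drivers for the PDS IMG and JP2 formats.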

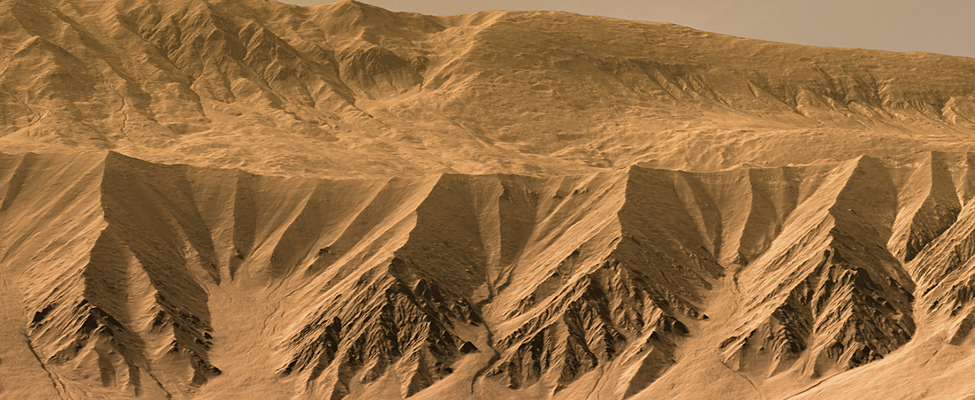

(Above: Gasa Crater, Mars, rendered using Autodesk Maya and Adobe Lightroom. ESP_014081_1440)

To match or exaggerate elevations in the model, I have a spreadsheet that calculates displacement ratios using input image dimensions, altimetry range, and pixel scale. The model is created in Autodesk Maya, where I set up the mesh, camera, sky, and lighting. I can create clouds either with a volume-rendered object in Maya or by overlaying the rendered scene on an actual photograph. The rendered image is then brought into Adobe Lightroom for final processing.
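The displacement-ratio calculation can be sketched in a few lines of Python. The formula below is an assumption about how such a ratio is typically derived, not a copy of my spreadsheet: it converts the altimetry range from meters to scene units using the ground width covered by the image, then applies an optional vertical exaggeration. The function name, the parameters, and the example numbers are all hypothetical.

```python
def displacement_settings(width_px, height_px, pixel_scale_m, elev_range_m,
                          mesh_width=10.0, exaggeration=1.0):
    """Return mesh depth and displacement height in scene units.

    width_px, height_px : dimensions of the displacement map in pixels
    pixel_scale_m       : ground size of one pixel, in meters
    elev_range_m        : max elevation minus min elevation, in meters
    mesh_width          : width of the mesh plane in scene units (assumed)
    exaggeration        : 1.0 keeps true proportions, >1.0 stretches relief
    """
    ground_width_m = width_px * pixel_scale_m
    units_per_meter = mesh_width / ground_width_m
    # Keep the mesh plane at the same aspect ratio as the image.
    mesh_depth = mesh_width * height_px / width_px
    # Height the full 0..1 displacement range should span in the scene.
    displacement_height = elev_range_m * units_per_meter * exaggeration
    return mesh_depth, displacement_height


if __name__ == "__main__":
    # Illustrative numbers only: a 1000 x 2000 px map at 1 m/pixel
    # covering 100 m of relief, placed on a mesh 10 scene units wide.
    depth, height = displacement_settings(1000, 2000, 1.0, 100.0)
    print(f"mesh depth: {depth}, displacement height: {height}")
```

With `exaggeration=1.0` the relief keeps the same proportions as the horizontal scale; larger values stretch the terrain vertically.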

(Above: Slopes along a Coprates Chasma ridge, rendered using Autodesk Maya and Adobe Lightroom. HiRISE data processed using HiView and gdal. ESP_034619_1670)

Kevin’s Flickr album of Mars images from HiRISE data.

If you have a story to tell about how you use HiRISE data, drop us a line:

madewithhirise (at) uahirise.org